Singapore’s Model AI Governance Framework: Shifting Perspectives in AI & Data

By now, most of us recognise that generative AI could transform industries from healthcare to entertainment, enhance creativity, and help solve complex problems. A notable example is the release of GPT-4o, which has enabled real-time conversational translation and made AI tutoring widely accessible. However, for people and businesses to adopt this technology with confidence, a fair and trusted ecosystem around the data that AI systems are built on and consume is essential. The Model AI Governance Framework for Generative AI, released by the AI Verify Foundation in collaboration with the Infocomm Media Development Authority (IMDA), a statutory board under Singapore’s Ministry of Communications and Information, highlights this need and outlines several key challenges currently facing the AI ecosystem.

Key Challenges Facing the AI Ecosystem

Access to High-Quality Data. AI model development requires vast quantities of high-quality data. For instance, OpenAI's GPT-4 is rumoured to have been trained on almost 60TB of data. Without access to datasets of this scale and quality, it is difficult to build capable AI systems.

Copyright Infringement. Training AI models on copyrighted material can lead to legal complications, and related disputes extend to creators' likenesses: Scarlett Johansson, for example, accused OpenAI of replicating her voice for GPT-4o. Ensuring fair compensation and value transfer for data creators, similar to royalties for artists and authors, is crucial.

Personal Data Usage. The trusted use of personal data in AI is paramount. Policymakers must clearly articulate how personal data laws apply to AI technologies. There is a growing belief that Personally Identifiable Information (PII) should only be used for training if Privacy-Enhancing Technologies (PETs) have been applied.
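To make this concrete, here is a minimal Python sketch of one simple de-identification step: masking PII with regular expressions before text enters a training set. The patterns and the `mask_pii` name are hypothetical illustrations. Regex masking is far weaker than formal PETs such as differential privacy and will miss many kinds of PII (names, addresses, national ID numbers), so treat it only as a starting point.

```python
import re

# Hypothetical patterns for illustration only; real PII detection needs much
# broader coverage and usually a named-entity recognition model as well.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}

def mask_pii(text: str) -> str:
    """Replace every matched PII span with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

print(mask_pii("Contact jane.doe@example.com or +65 6123 4567."))
# Contact [EMAIL] or [PHONE].
```

A typed placeholder keeps the sentence structure usable for training while dropping the identifying value itself.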

Content Provenance Post-Training. Ensuring the provenance of AI-generated content through digital watermarking and cryptographic methods can provide verifiable integrity and authenticity. This enhances trust and accountability in AI systems. However, current solutions, such as those from Meta, can only be verified by the same company that encodes the watermark, indicating a need for more universally verifiable methods.
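The cryptographic side of provenance can be sketched in a few lines of Python. This hypothetical example binds a SHA-256 hash of a piece of content to creator metadata and tags the pair with an HMAC; watermarking, which embeds a signal in the content itself, is a separate technique not shown here. Note that an HMAC shares the limitation described above: only holders of the secret key can verify a tag, whereas a public-key signature scheme would let anyone verify it.

```python
import hashlib
import hmac
import json

def make_manifest(content: bytes, creator: str, key: bytes) -> dict:
    """Bind a SHA-256 hash of the content to creator metadata under an HMAC tag."""
    claim = {"sha256": hashlib.sha256(content).hexdigest(), "creator": creator}
    payload = json.dumps(claim, sort_keys=True).encode()  # canonical byte form
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "tag": tag}

def verify_manifest(content: bytes, manifest: dict, key: bytes) -> bool:
    """Return True only if the content is unchanged and the claim is untampered."""
    claim = manifest["claim"]
    if hashlib.sha256(content).hexdigest() != claim["sha256"]:
        return False  # content was altered after the manifest was issued
    payload = json.dumps(claim, sort_keys=True).encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, manifest["tag"])  # constant-time compare

issuer_key = b"hypothetical-issuer-secret"  # only the issuer holds this key
manifest = make_manifest(b"generated image bytes", "model-x", issuer_key)
print(verify_manifest(b"generated image bytes", manifest, issuer_key))  # True
print(verify_manifest(b"edited image bytes", manifest, issuer_key))     # False
```

Swapping the HMAC for a public-key signature would keep the same manifest structure while allowing third parties to verify provenance without the issuer's secret.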

Valyu’s Approach to Responsible AI

Valyu is dedicated to providing trusted data to AI models and applications, addressing the challenges above through our platform and tooling. We collaborate directly with data providers and content creators to ensure their rights are respected, enabling them to license their data to AI companies and applications. Smart contracts that encode governance policies let us enforce rights-preserving access to these datasets. To identify and safeguard copyrighted material, we are also implementing a ContentID system that verifies data uploaded to our platform and ensures proper attribution to its creators.
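As a rough illustration of how a ContentID-style check might flag uploads that resemble registered works, the sketch below compares word shingles using Jaccard similarity. It is not Valyu's ContentID system: the `ContentRegistry` class, the 3-word shingles and the 0.5 threshold are all hypothetical choices, and media-matching systems often rely on perceptual fingerprints that survive re-encoding and cropping.

```python
from dataclasses import dataclass, field

def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles of a lower-cased, whitespace-split text."""
    words = text.lower().split()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}

def jaccard(a: set, b: set) -> float:
    """Size of the intersection over size of the union (0.0 if both are empty)."""
    return len(a & b) / len(a | b) if a or b else 0.0

@dataclass
class ContentRegistry:
    threshold: float = 0.5  # hypothetical similarity cut-off
    works: dict[str, tuple[str, set]] = field(default_factory=dict)

    def register(self, work_id: str, creator: str, text: str) -> None:
        """Store a registered work's creator and shingle set."""
        self.works[work_id] = (creator, shingles(text))

    def check_upload(self, text: str) -> list[tuple[str, str, float]]:
        """Return (work_id, creator, similarity) for works the upload resembles."""
        upload = shingles(text)
        matches = []
        for work_id, (creator, ref) in self.works.items():
            score = jaccard(upload, ref)
            if score >= self.threshold:
                matches.append((work_id, creator, score))
        return sorted(matches, key=lambda m: m[2], reverse=True)

registry = ContentRegistry()
registry.register("poem-1", "alice", "the quick brown fox jumps over the lazy dog")
print(registry.check_upload("The quick brown fox jumps over the lazy cat"))
# [('poem-1', 'alice', 0.75)]
```

Returning the matched creator alongside the score is what allows a flagged upload to be routed back to the original rights holder for attribution.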

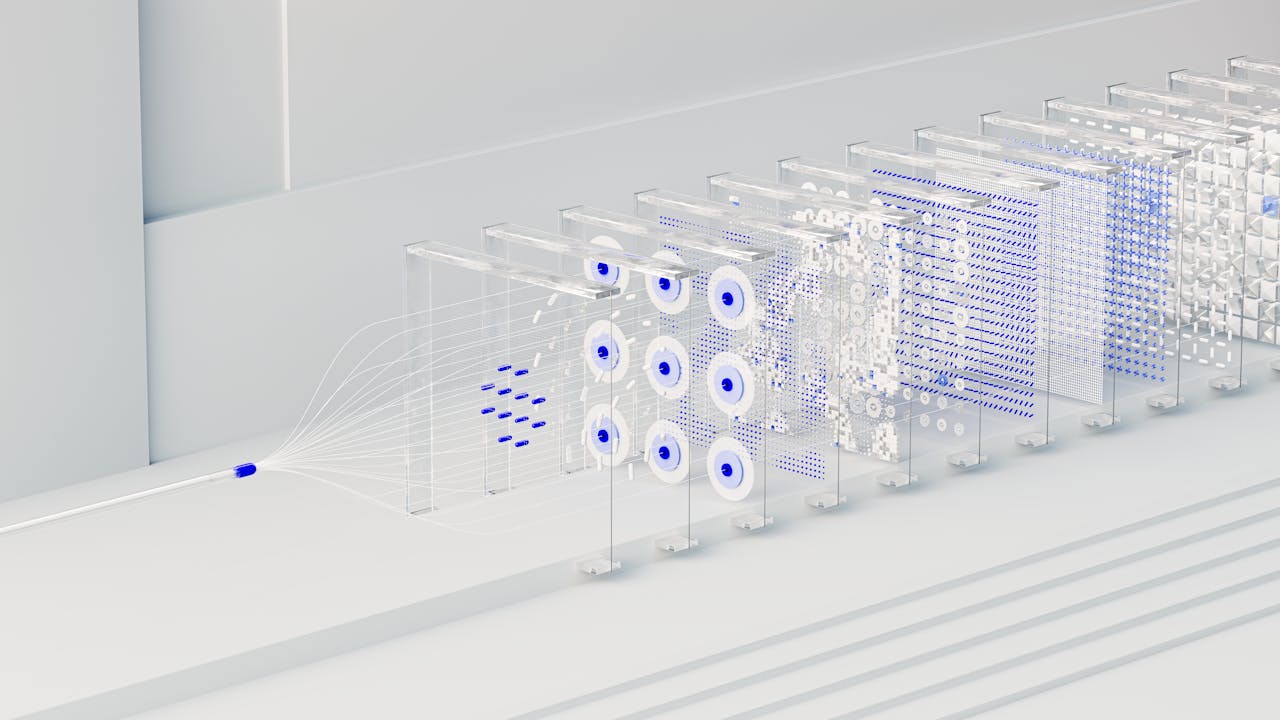

Our data quality pipelines play a crucial role in maintaining the integrity of the datasets on our platform. These pipelines assess both the apparent quality of the data and its underlying substance. For images, for example, we analyse artefacts, noise, bias, toxicity and the semantics of what is depicted, as well as consistency and completeness. Additionally, we estimate the utility the data may have to the buyer, giving AI companies a clear understanding of the datasets they are purchasing. Our pipelines identify any Personally Identifiable Information (PII) and offer users the ability to mask sensitive data through our data synthesis tooling. For post-training revenue attribution, we are developing a framework that integrates with existing models: when a model fine-tuned on a specific dataset answers a query, revenue is shared according to the data points that most influenced the response, ensuring fair compensation for data creators.
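One way such per-query revenue sharing could work is sketched below, assuming influence scores for each training data point are already available for the query (computing them, for example with influence-estimation methods, is the hard part and is out of scope). The function name, the top-k cut-off and the proportional split are hypothetical choices, not a description of the framework under development.

```python
from collections import defaultdict

def attribute_revenue(
    influence: dict[str, float],  # data_point_id -> influence on this query (assumed given)
    owner: dict[str, str],        # data_point_id -> creator id
    revenue: float,
    top_k: int = 3,
) -> dict[str, float]:
    """Split one query's revenue among creators of the top-k most influential points."""
    # Keep only positively influential points, highest score first.
    ranked = sorted(
        ((pid, s) for pid, s in influence.items() if s > 0),
        key=lambda item: item[1],
        reverse=True,
    )[:top_k]
    total = sum(s for _, s in ranked)
    payouts: dict[str, float] = defaultdict(float)
    if total == 0:
        return dict(payouts)  # no positive influence, nothing to attribute
    for pid, score in ranked:
        payouts[owner[pid]] += revenue * score / total  # proportional share
    return dict(payouts)

influence = {"d1": 3.0, "d2": 1.0, "d3": 0.5, "d4": -1.0}
owner = {"d1": "alice", "d2": "bob", "d3": "alice", "d4": "carol"}
print(attribute_revenue(influence, owner, revenue=8.0, top_k=2))
# {'alice': 6.0, 'bob': 2.0}
```

Restricting payouts to the top-k positively influential points keeps each query's attribution sparse, and the proportional split guarantees that the payouts sum to the query's revenue.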

Conclusion

Valyu's approach to the key challenges in the AI ecosystem is an important step towards more responsible and sustainable AI development. By prioritising data quality, copyright protection, privacy preservation, and fair compensation, we are addressing current issues and paving the way for future advancements in AI data governance.

Ultimately, the future of AI depends not just on technological advancements, but on our ability to create and maintain ethical, transparent, and fair systems that benefit all of society. Infrastructure like ours is essential for solving current problems and building towards a future where AI can reach its full potential while respecting individual rights and fostering collective progress.

This article is written by Alexander Ng and Harvey Yorke.