Event Recap: Open Data, Research and Web Archiving in the Age of AI and LLMs

Last week, we hosted an event alongside the Common Crawl Foundation and UCL, bringing together researchers, academics, and industry experts. The focus was on the evolving landscape of open data and the critical role of web crawling in driving transparency and collaboration in research. In an era where AI and large language models (LLMs) are reshaping the way we engage with digital information, the event explored both the promise and the challenges of open data.

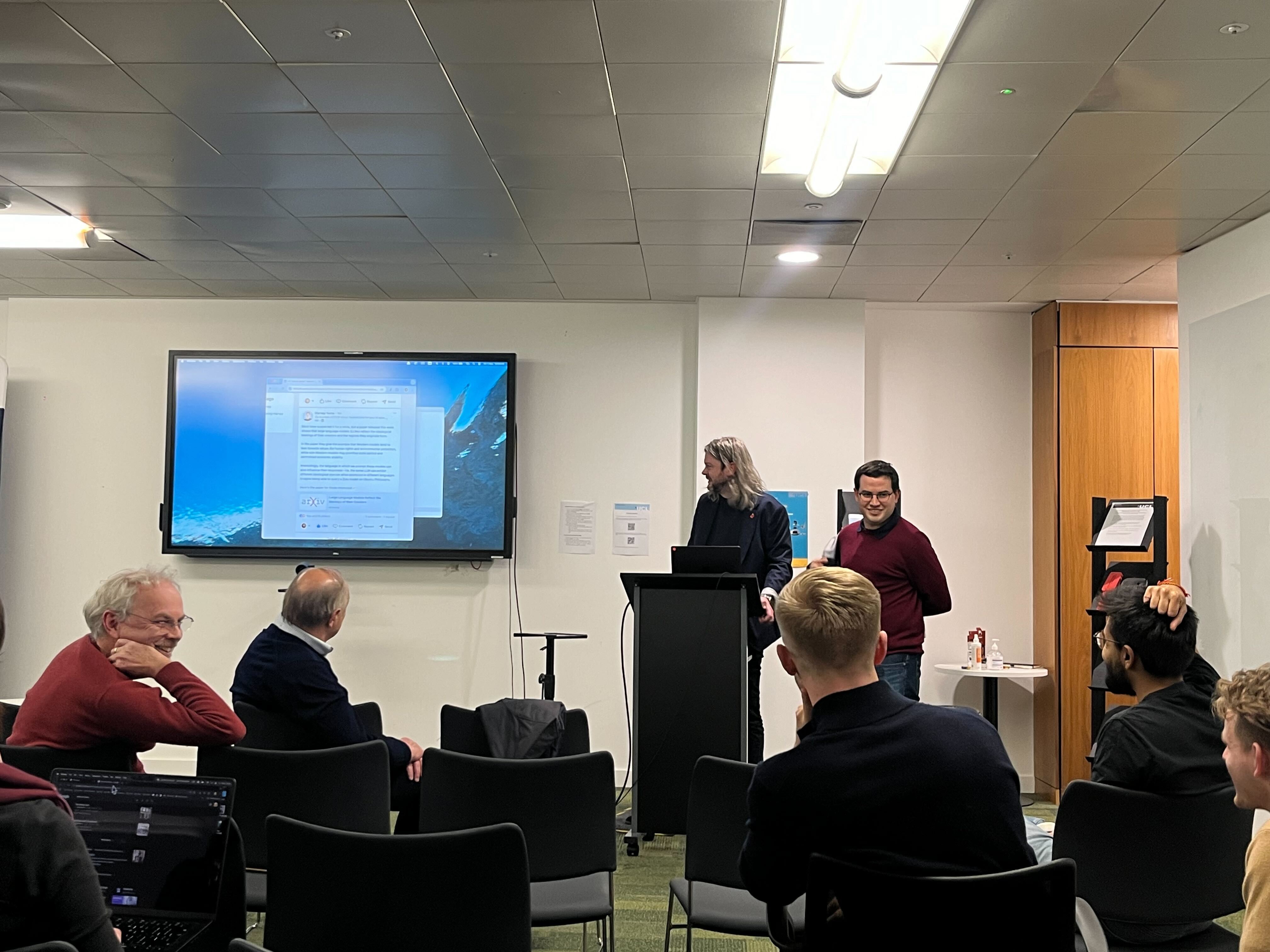

Thom Vaughan and Pedro Ortiz Suarez of Common Crawl presenting at the event.

Thom Vaughan and Pedro Ortiz Suarez from Common Crawl showcased examples of how Common Crawl’s extensive open dataset is being used in research, contributing to advances not only in AI but also in other fields such as the social sciences. They also emphasised strategies for building a sustainable open-data ecosystem.

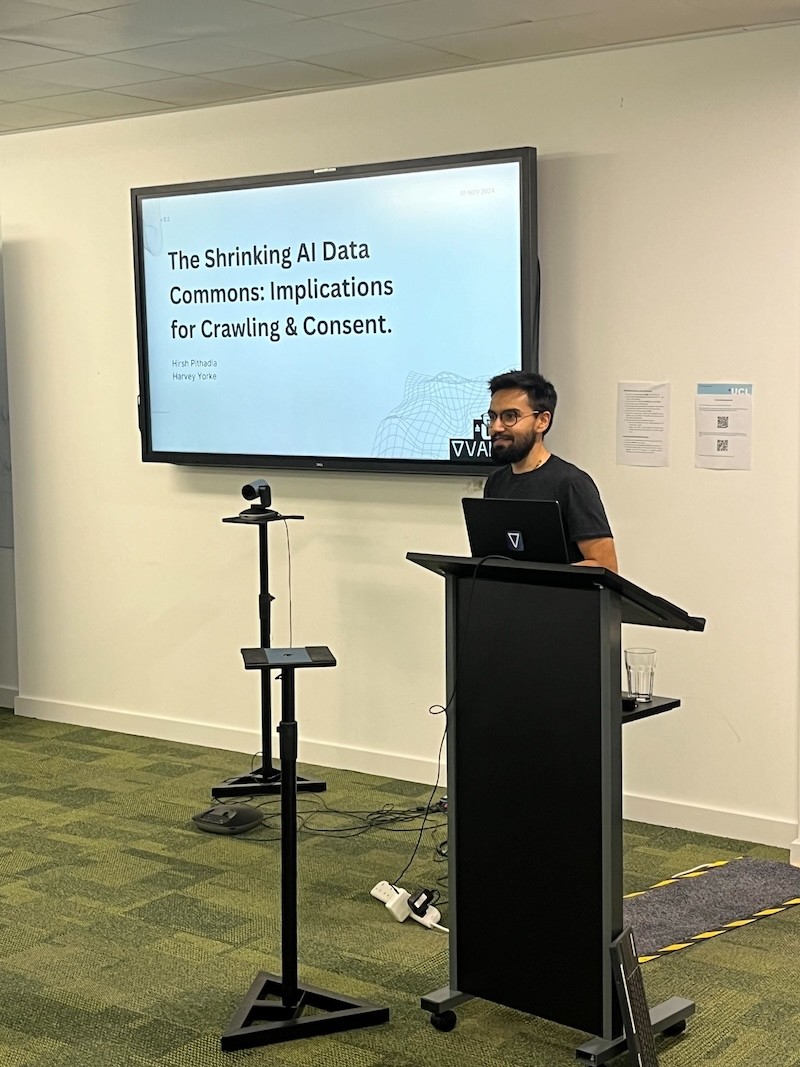

Valyu’s co-founders, Hirsh Pithadia and Harvey Yorke, addressed “opt-out” and the AI-induced web consent crisis. They expanded on the implications of growing restrictions on data sharing, a trend that has escalated in recent years as organisations and individuals seek to protect their content from automated scraping for AI purposes. They noted that these restrictions are a response to concerns over attribution and monetisation in the AI era, but also raised concerns about a potential shift toward a closed internet, where content is increasingly hidden from crawlers and excluded from indexing.

Hirsh Pithadia, Valyu's Co-Founder

The event highlighted a pressing reality: as AI and LLMs continue to advance, open data is critical to ensuring a fair and inclusive digital landscape. It empowers researchers, nonprofits, and smaller organisations by giving them access to data resources. This, in turn, boosts interdisciplinary collaboration and innovation that would be difficult to achieve in a restricted data environment. Yet as publishers increasingly restrict access to their content, either through explicit bot blocking via the Robots Exclusion Protocol (robots.txt) or through their Terms of Use, this raises the question of how we might create a more balanced and complementary ecosystem.
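For readers less familiar with the Robots Exclusion Protocol, here is a minimal sketch of how a compliant crawler interprets a site's robots.txt, using Python's standard-library `urllib.robotparser`. The robots.txt content, the `publisher.example` URL, and the `SomeSearchBot` agent are hypothetical, chosen for illustration; `CCBot` and `GPTBot` are the user-agent tokens of Common Crawl's and OpenAI's crawlers respectively. Note that robots.txt is advisory: it only keeps out crawlers that choose to honour it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a publisher that opts out of two AI-related
# crawlers (Common Crawl's CCBot and OpenAI's GPTBot) while still
# allowing every other user agent.
ROBOTS_TXT = """\
User-agent: CCBot
Disallow: /

User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def check_access(robots_txt: str, url: str, agents: list[str]) -> dict[str, bool]:
    """Return, for each user agent, whether robots.txt permits fetching url."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    # Hypothetical article URL and crawler names, for illustration only.
    url = "https://publisher.example/articles/open-data"
    results = check_access(ROBOTS_TXT, url, ["CCBot", "GPTBot", "SomeSearchBot"])
    for agent, allowed in results.items():
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

Running it reports CCBot and GPTBot as blocked and SomeSearchBot as allowed. A real crawler would fetch the live file with `RobotFileParser.set_url()` and `read()`; parsing a string here simply keeps the example self-contained.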

We need to consider fairer alternatives that support and acknowledge content creators, such as new approaches to compensation and attribution. By exploring sustainable and fair models for data access, we can aim for a balanced approach that values the contributions of publishers while maintaining open, inclusive access for research and innovation.